Give me feedback! :)

Current

- Co-Director at ML Alignment & Theory Scholars Program (2022-current)

- Co-Founder & Board Member at London Initiative for Safe AI (2023-current)

- Manifund Regrantor (2023-current)

Past

- Ph.D. in Physics from the University of Queensland (2017-2022)

- Group organizer at Effective Altruism UQ (2018-2021)

Comments

It seems plausible to me that at least some MATS scholars are somewhat motivated by a desire to work at scaling labs for money, status, etc. However, the value alignment of scholars towards principally reducing AI risk seems generally very high. In Winter 2023-24, our most empirical-research-dominated cohort, mentors rated the median scholar's value alignment at 8/10 and 85% of scholars were rated 6/10 or above, where 5/10 was “Motivated in part, but would potentially switch focus entirely if it became too personally inconvenient.” To me this is a very encouraging statistic, but I’m sympathetic to concerns that well-intentioned young researchers who join scaling labs might experience value drift, or find it difficult to promote safety culture internally or sound the alarm if necessary; we are consequently planning a “lab safety culture” workshop in Summer. Notably, only 3.7% of surveyed MATS alumni say they are working on AI capabilities; in one case, an alumnus joined a scaling lab capabilities team and transferred to working on safety projects as soon as they were able. As with all things, maximizing our impact is about striking the right balance between trust and caution, and I’m encouraged by the high apparent value alignment of our alumni and scholars.

We additionally believe:

- Advancing researchers to get hired at lab safety teams is generally good;

- We would prefer that the people on lab safety teams have more research experience and are more value-aligned, all else equal, and we think MATS improves scholars on these dimensions;

- We would prefer lab safety teams to be larger, and it seems likely that MATS helps create a stronger applicant pool for these jobs, resulting in more hires overall;

- MATS creates a pipeline for senior researchers on safety teams to hire people they have worked with for up to 6.5 months in-program, observing their competency and value alignment;

- Even if MATS alumni defect to work on pure capabilities, we would still prefer them to be more value-aligned than otherwise (though of course this has to be weighed against the boost MATS gave to their research abilities).

Regarding “AI control,” I suspect you might be underestimating the support that this metastrategy has garnered in the technical AI safety community, particularly among prosaic AGI safety thought leaders. I see Paul’s decision to leave ARC in favor of the US AISI as a potential endorsement of the AI control paradigm over intent alignment, rather than necessarily an endorsement of an immediate AI pause (I would update against this if he pushes more for a pause than for evals and regulations). I do not support AI control to the exclusion of other metastrategies (including intent alignment and Pause AI), but I consider it a vital and growing component of my strategy portfolio.

It’s true that many AI safety projects are pivoting towards AI governance. I think the establishment of AISIs is wonderful; I am in contact with MATS alumni Alan Cooney and Max Kauffman at the UK AISI and similarly want to help the US AISI with hiring. I would have been excited for Vivek Hebbar’s, Jeremy Gillen’s, Peter Barnett’s, James Lucassen’s, and Thomas Kwa’s research in empirical agent foundations to continue at MIRI, but I am also excited about the new technical governance focus that MATS alumni Lisa Thiergart and Peter Barnett are exploring. I additionally have supported AI safety org accelerator Catalyze Impact as an advisor and Manifund Regrantor and advised several MATS alumni founding AI safety projects; it's not easy to attract or train good founders!

MATS has been interested in supporting more AI governance research since Winter 2022-23, when we supported Richard Ngo and Daniel Kokotajlo (although both declined to accept scholars past the training program) and offered support to several more AI gov researchers. In Summer 2023, we reached out to seven handpicked governance/strategy mentors (some of whom you recommended, Akash), though only one was interested in mentoring. In Winter 2023-24 we tried again, with little success. In preparation for the upcoming Summer 2024 and Winter 2024-25 Programs, we reached out to 25 AI gov/policy/natsec researchers (whom we asked to also share with their networks) and received expressions of interest from 7 further AI gov researchers. As you can see from our website, MATS is supporting four AI gov mentors in Summer 2024 (six if you count Matija Franklin and Philip Moreira Tomei, who are primarily working on value alignment). We’ve additionally reached out to RAND, IAPS, and others to provide general support. MATS is considering a larger pivot, but available mentors are clearly a limiting constraint. Please contact me if you’re an AI gov researcher and want to mentor!

Part of the reason that AI gov mentors are harder to find is that programs like the RAND TASP, GovAI, IAPS, Horizon, ERA, etc. fellowships seem to be doing a great job collectively of leveraging the available talent. It’s also possible that AI gov researchers are discouraged from mentoring at MATS because of our obvious associations with AI alignment (it’s in the name) and the Berkeley longtermist/rationalist scene (we’re talking on LessWrong and operate in Berkeley). We are currently considering ways to support AI gov researchers who don’t want to affiliate with the alignment, x-risk, longtermist, or rationalist communities.

I’ll additionally note that MATS has historically supported much research that indirectly contributes to AI gov/policy, such as Owain Evans’, Beth Barnes’, and Francis Rhys Ward’s capabilities evals, Evan Hubinger’s alignment evals, Jeffrey Ladish’s capabilities demos, Jesse Clifton’s and Caspar Oesterheld’s cooperation mechanisms, etc.

Yeah, that amount seems reasonable, if on the low side, for founding a small org. What makes you think $300k is reasonably easy to raise in this current ecosystem? Also, I'll note that larger orgs need significantly more.

I think the high interest in working at scaling labs relative to governance or nonprofit organizations can be explained by:

- Most of the scholars in this cohort were working on research agendas for which there are world-leading teams based at scaling labs (e.g., 44% interpretability, 17% oversight/control). Fewer total scholars were working on evals/demos (18%), agent foundations (8%), and formal verification (3%). Therefore, I would not be surprised if many scholars wanted to pursue interpretability or oversight/control at scaling labs.

- There seems to be an increasing trend in the AI safety community towards the belief that most useful alignment research will occur at scaling labs (particularly once there are automated research assistants) and external auditors with privileged frontier model access (e.g., METR, Apollo, AISIs). This view seems particularly strongly held by proponents of the "AI control" metastrategy.

- Anecdotally, scholars seemed generally in favor of careers at an AISI or evals org, but would prefer to continue pursuing their current research agenda (which might be overdetermined given the large selection pressure they faced to get into MATS to work on that agenda).

- Starting new technical AI safety orgs/projects seems quite difficult in the current funding ecosystem. I know of many alumni who have founded or are trying to found projects who express substantial difficulties with securing sufficient funding.

Note that the career fair survey might tell us little about how likely scholars are to start new projects as it was primarily seeking interest in which organizations should attend, not in whether scholars should join orgs vs. found their own.

Can you estimate dark triad scores from the Big Five survey data?

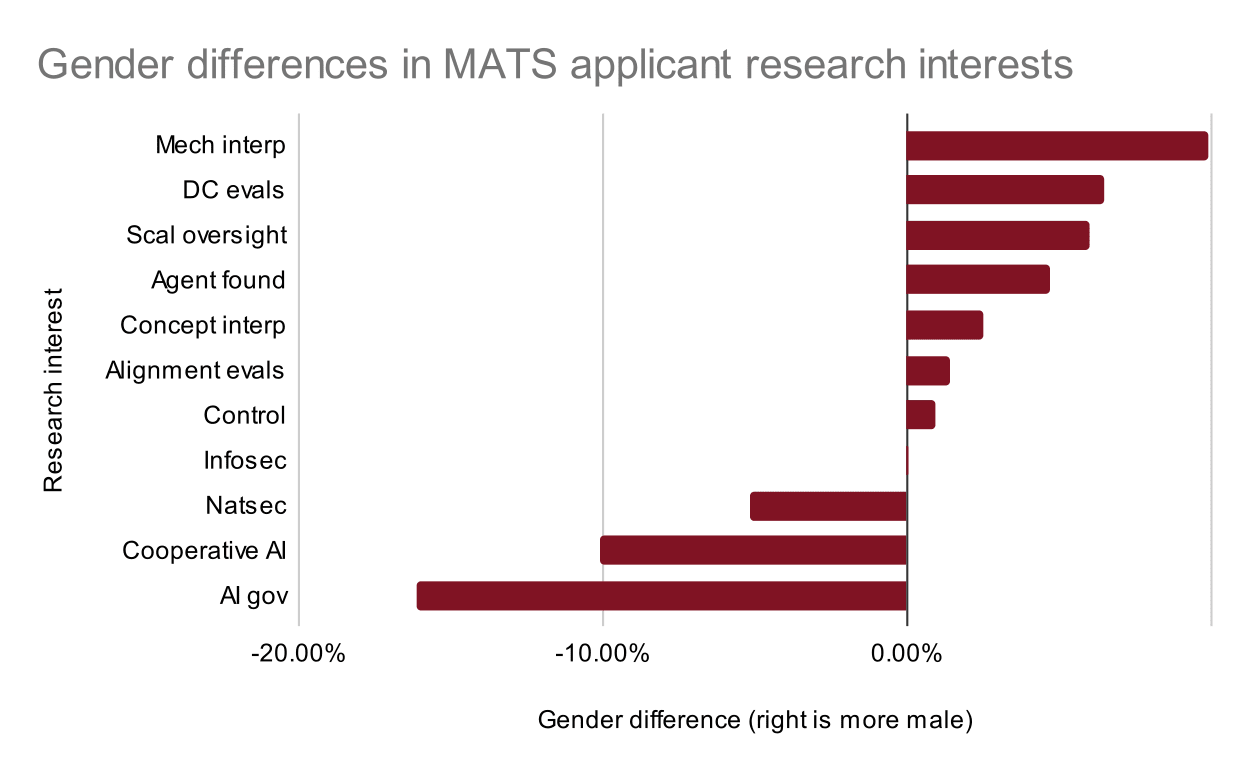

You might be interested in this breakdown of gender differences in the research interests of the 719 applicants to the MATS Summer 2024 and Winter 2024-25 Programs who shared their gender. The plot shows the difference between the percentage of male applicants who indicated interest in specific research directions and the percentage of female applicants who indicated interest in the same.

The most male-dominated research interest is mech interp, possibly due to the high male representation in software engineering (~80%), physics (~80%), and mathematics (~60%). The most female-dominated research interest is AI governance, possibly due to the high female representation in the humanities (~60%). Interestingly, cooperative AI was a female-dominated research interest, which seems to match the result from your survey where female respondents were less in favor of "controlling" AIs relative to men and more in favor of "coexistence" with AIs.

This is potentially exciting news! You should definitely visit the LISA office, where many MATS extension program scholars are currently located.

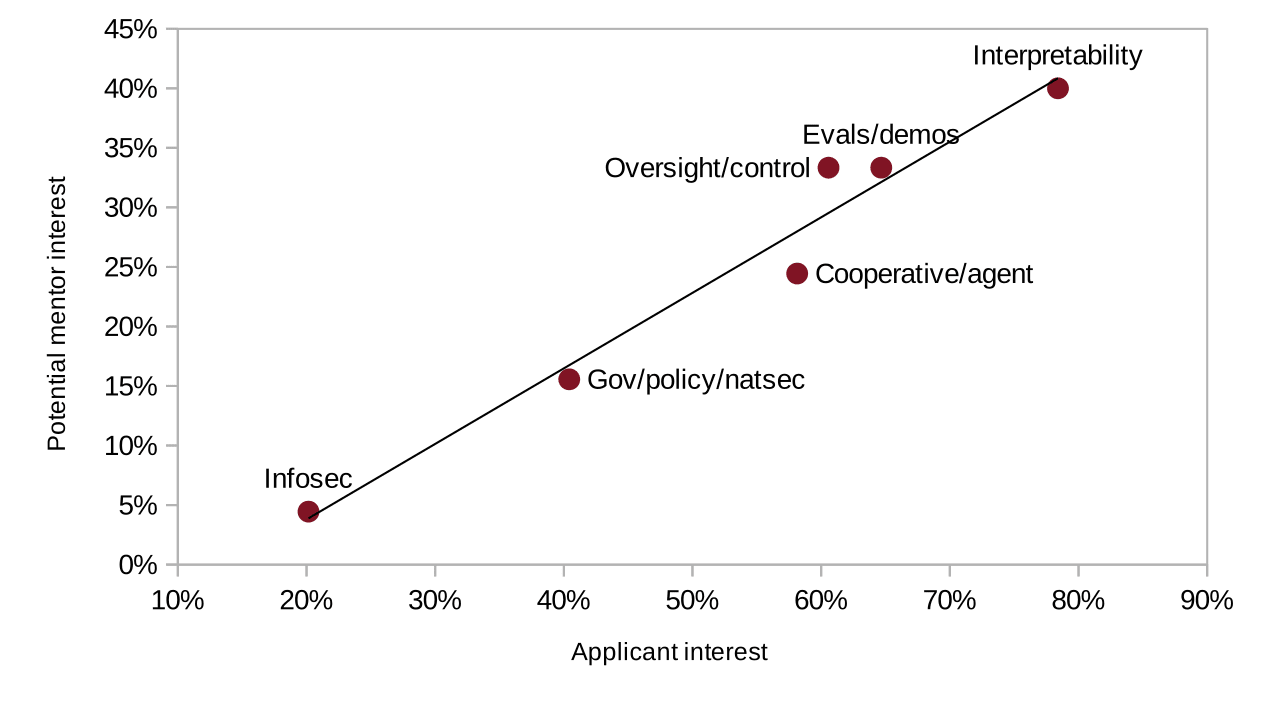

Last program, 44% of scholar research was on interpretability, 18% on evals/demos, 17% on oversight/control, etc. In summer, we intend for 35% of scholar research to be on interpretability, 17% on evals/demos, 27% on oversight/control, etc., based on our available mentor pool and research priorities. Interpretability will still be the largest research track and still has the greatest interest from potential mentors and applicants. The plot below shows the research interests of 1331 MATS applicants and 54 potential mentors who have applied for our Summer 2024 or Winter 2024-25 Programs.

Oh, I think we forgot to ask scholars if they wanted Microsoft at the career fair. Is Microsoft hiring AI safety researchers?

Thank you so much for conducting this survey! I want to share some information on behalf of MATS:

- In comparison to the AIS survey gender ratio of 9 M:F, MATS Winter 2023-24 scholars and mentors were 4 M:F and 12 M:F, respectively. Our Winter 2023-24 applicants were 4.6 M:F, whereas our Summer 2024 applicants were 2.6 M:F, closer to the EA survey ratio of 2 M:F. This data seems to indicate a large recent change in gender ratios of people entering the AIS field. Did you find that your AIS survey respondents with more AIS experience were significantly more male than newer entrants to the field?

- MATS Summer 2024 applicants and interested mentors similarly prioritized research to "understand existing models", such as interpretability and evaluations, over research to "control the AI" or "make the AI solve it", such as scalable oversight and control/red-teaming, over "theory work", such as agent foundations and cooperative AI (note that some cooperative AI work is primarily empirical).

- The forthcoming summary of our "AI safety talent needs" interview series generally agrees with this survey's findings regarding the importance of "soft skills" and "work ethic" in impactful new AIS contributors. Watch this space!

- In addition to supporting core established AIS research paradigms, MATS would like to encourage the development of new paradigms. For better or worse, the current AIS funding landscape seems to have a high bar for speculative research into new paradigms. Has AE Studio considered sponsoring significant bounties or impact markets for scoping promising new AIS research directions?

- Did survey respondents mention how they proposed making AIS more multidisciplinary? Which established research fields are more needed in the AIS community?

- Did EAs consider AIS exclusively a longtermist cause area, or did they anticipate near-term catastrophic risk from AGI?

- Thank you for the kind donation to MATS as a result of this survey!

Of the scholars ranked 5/10 and lower on value alignment, 63% worked with a mentor at a scaling lab, compared with 27% of the scholars ranked 6/10 and higher. The average scaling lab mentor rated their scholars' value alignment at 7.3/10 and rated 78% of their scholars at 6/10 and higher, compared to 8.0/10 and 90% for the average non-scaling lab mentor. This indicates that our scaling lab mentors were more discerning of value alignment on average than non-scaling lab mentors, or had a higher base rate of low-value-alignment scholars (probably both).

I also want to push back a bit against an implicit framing of the average scaling lab safety researcher we support as being relatively unconcerned about value alignment or the positive impact of their research; this seems manifestly false from my conversations with mentors, their scholars, and the broader community.