Philosophy PhD student, worked at AI Impacts, then Center on Long-Term Risk, then OpenAI. Quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI. Not sure what I'll do next yet. Views are my own & do not represent those of my current or former employer(s). I subscribe to Crocker's Rules and am especially interested to hear unsolicited constructive criticism. http://sl4.org/crocker.html

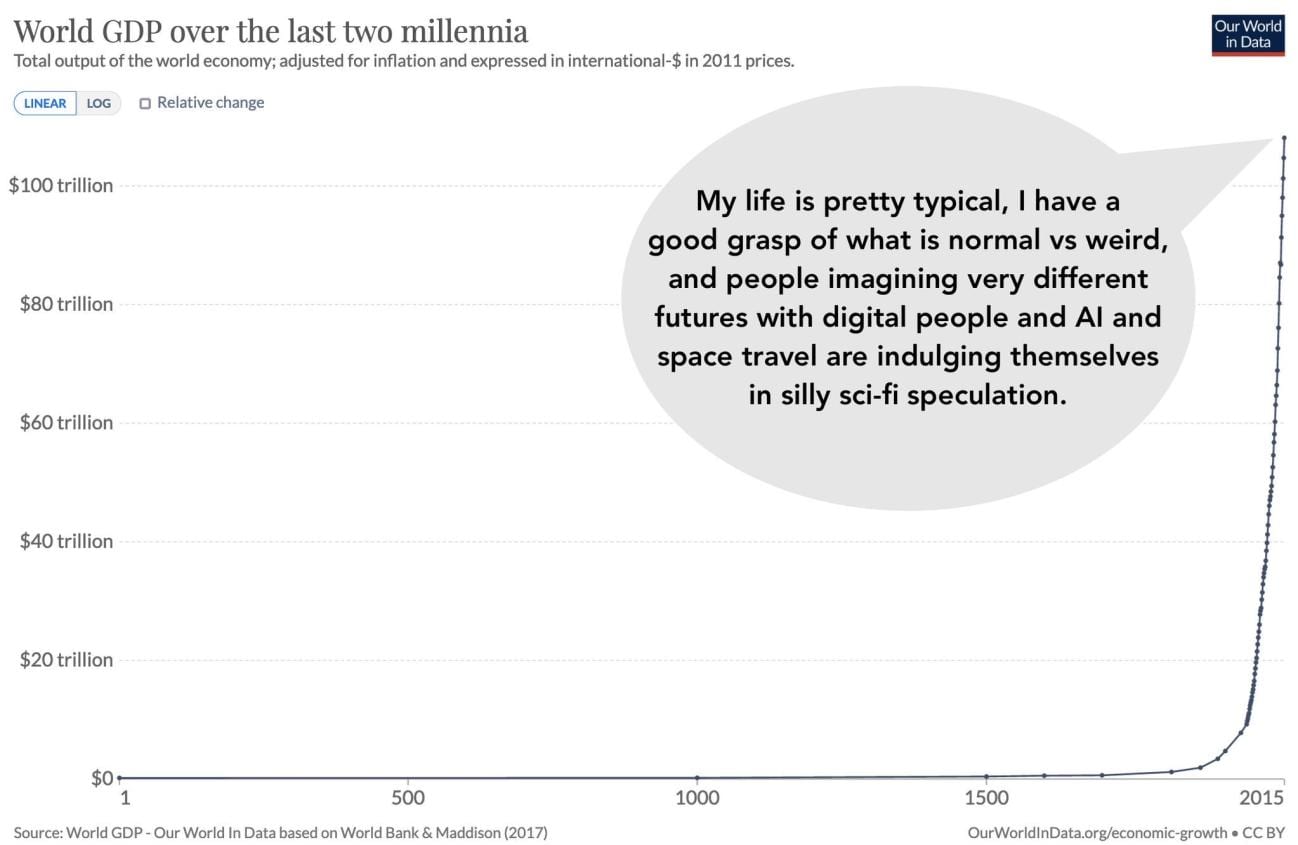

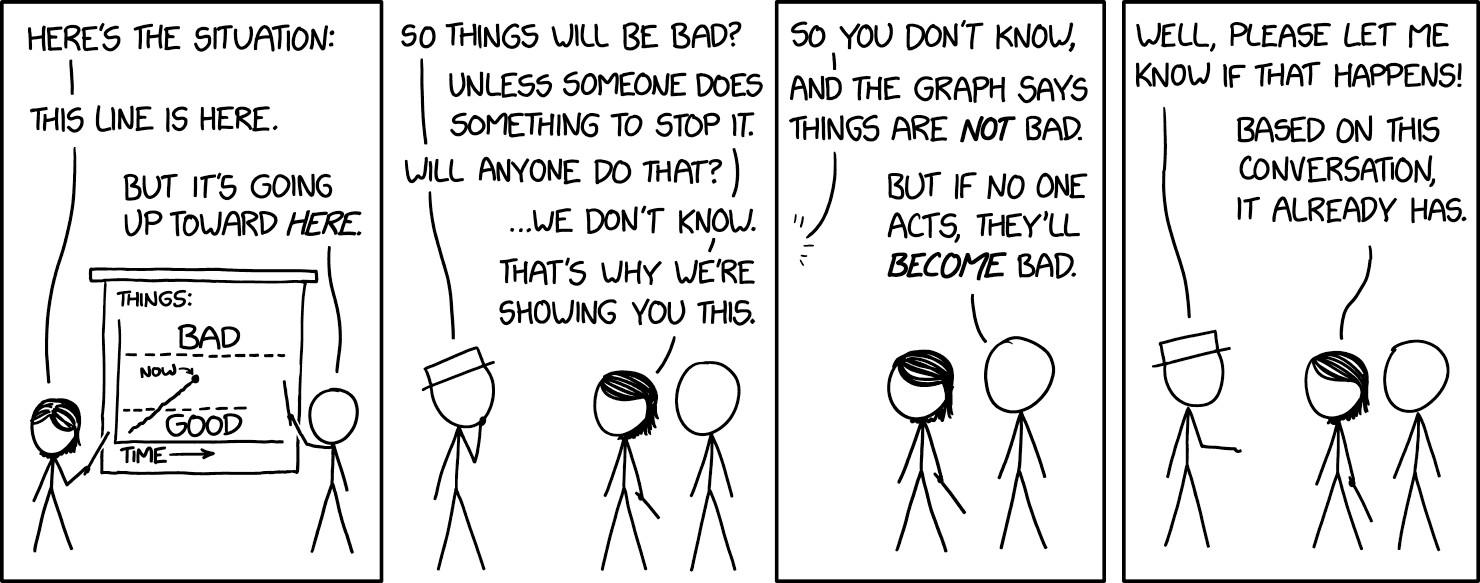

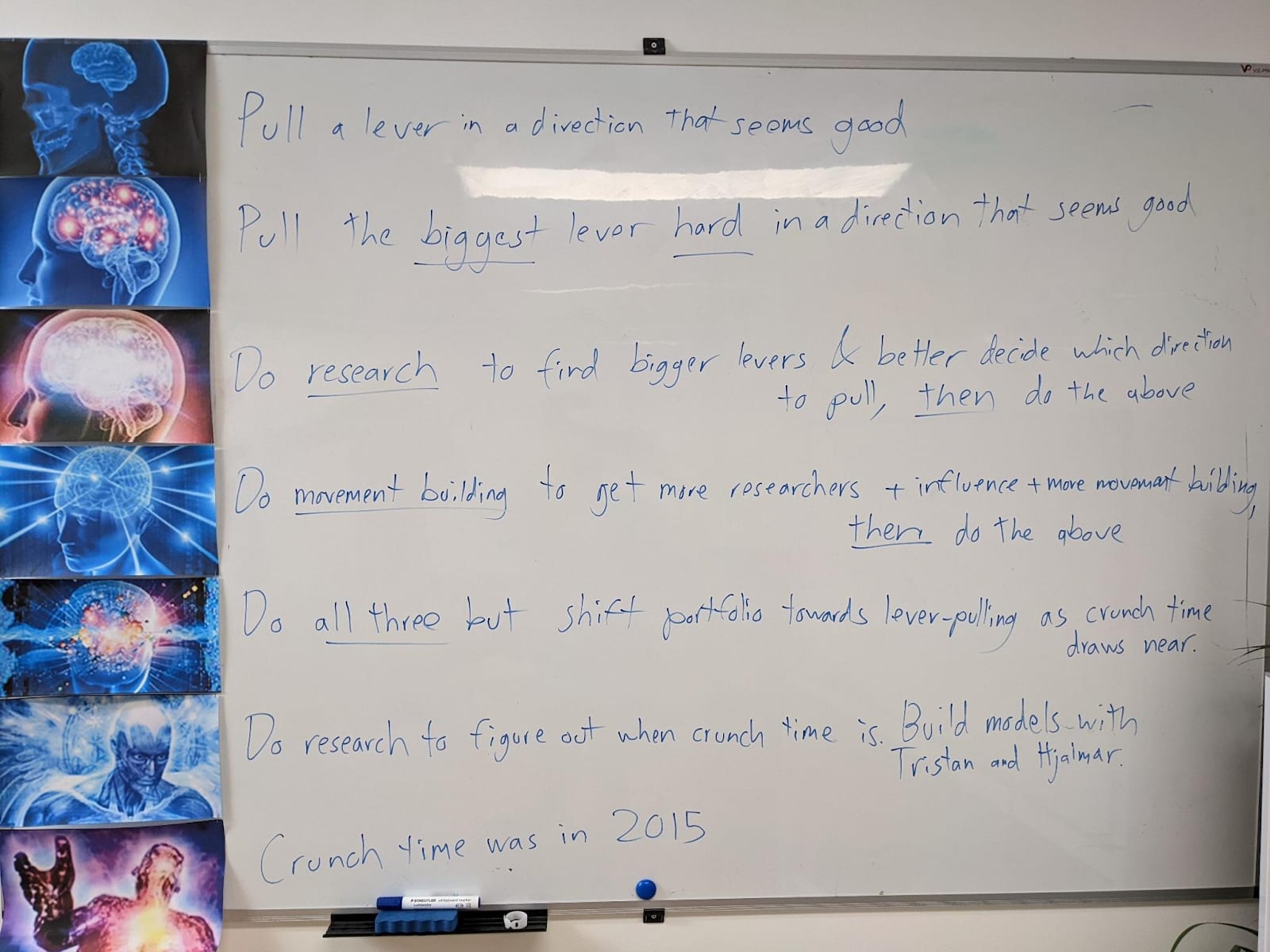

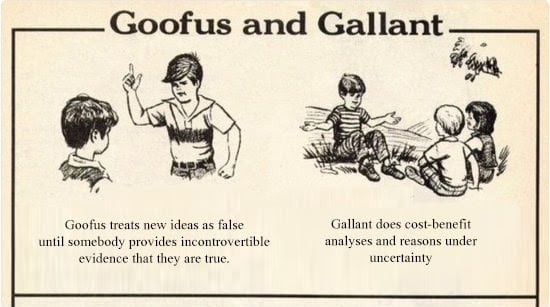

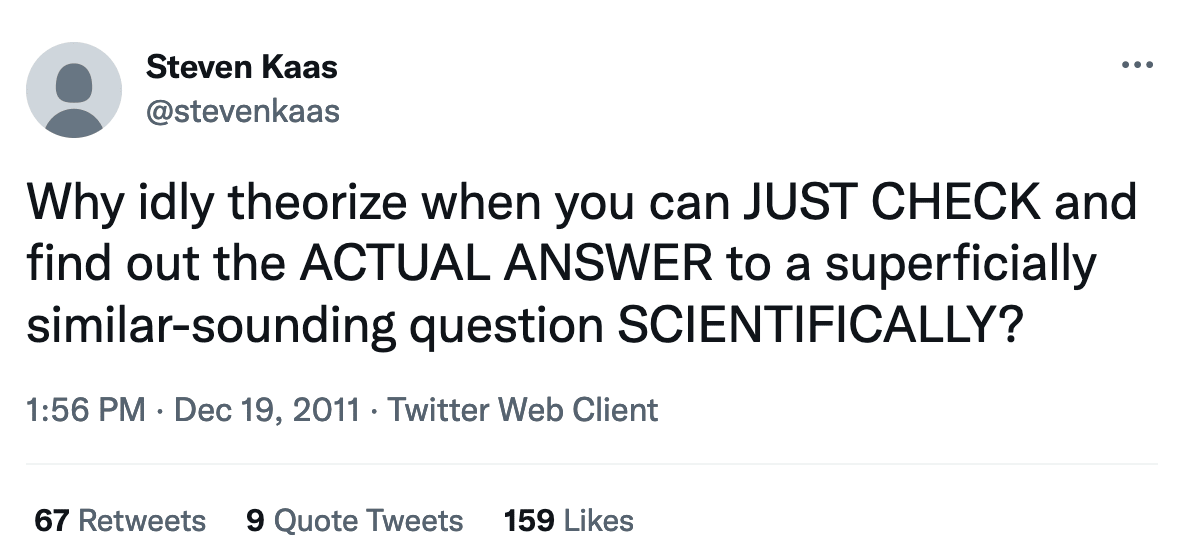

Some of my favorite memes:

(by Rob Wiblin)

(xkcd)

My EA Journey, depicted on the whiteboard at CLR:

(h/t Scott Alexander)

Comments

Gotcha. A tough situation to be in.

What about "Keep studying and learning in the hopes that (a) I'm totally wrong about AGI timelines and/or (b) government steps in and prevents AGI from being built for another decade or so?"

What about "Get organized, start advocating to make b happen?"

- Yep. Or if we wanna nitpick and be precise, better than the best humans at X, for all cognitive tasks/skills/abilities/jobs/etc. X.

- >50%.

I think that's a great answer -- assuming that's what you believe.

Personally, I don't believe point 3 on AI timelines -- I think AGI will probably be here by 2029, and it could indeed arrive this year. And even if it goes well and humans maintain control and we don't get concentration-of-power issues... the software development skills your students are learning will be obsolete, along with almost all skills.

> In general, Llama 3 70B is a competent agent with appropriate scaffolding, and Llama 3 8B also has decent performance.

I'm curious about, and skeptical of, this claim. If you set it up in an Auto-GPT-esque scaffold with a connection to the internet and the ability to edit docs, post forum comments, send emails, and so forth, and set it loose with some long-term goal like "accumulate money" or "befriend people" or whatever... does it actually chug along for hours and hours moving vaguely in the right direction, or does it e.g. get stuck pretty quickly or go into some sort of confused doom spiral?

The latter. Yeah, idk whether the sacrifice was worth it, but thanks for the support. Basically, I wanted to retain my ability to criticize the company in the future. I'm not sure what I'd want to say yet, though, & I'm a bit scared of media attention.

To clarify: I did sign something when I joined the company, so I'm still not completely free to speak (still under confidentiality obligations). But I didn't take on any additional obligations when I left.

Unclear how to value the equity I gave up, but it probably would have been about 85% of my family's net worth at least. But we are doing fine, please don't worry about us.

Fair enough.

What do you mean "of this type?" Why not just say "laws" full stop? What type are you referring to?

Do you have any sense of whether or not the models thought they were in a simulation?

I feel like this should be a top-level post.