Comments

For comparing CE-difference (or the mean reconstruction score), did these have similar L0s? If not, it's an unfair comparison (higher L0 usually means higher reconstruction accuracy).
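To make the L0 check concrete, here is a minimal sketch of how L0 is usually measured: the average number of nonzero SAE features per token. It assumes the feature activations are already in a NumPy array of shape `(n_tokens, n_features)`; `mean_l0` is a hypothetical helper name.

```python
import numpy as np


def mean_l0(feature_acts: np.ndarray) -> float:
    """Average number of nonzero SAE features per token.

    feature_acts: array of shape (n_tokens, n_features).
    """
    return float((feature_acts != 0).sum(axis=1).mean())


# Toy example: token 0 has 2 active features, token 1 has 1.
acts = np.array([[0.0, 1.2, 0.0, 0.3],
                 [0.5, 0.0, 0.0, 0.0]])
print(mean_l0(acts))  # 1.5
```

Comparing two SAEs' CE-difference is only apples-to-apples when these numbers roughly match.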

Seems tangential. I interpreted "loss recovered" as CE-related (not reconstruction-related).
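For reference, a minimal sketch of the commonly used CE "loss recovered" score, assuming zero-ablation as the baseline (other baselines, like mean-ablation, are also used). The function name and the example numbers are made up for illustration.

```python
def ce_loss_recovered(ce_clean: float, ce_sae: float, ce_zero_ablation: float) -> float:
    """Fraction of the CE gap (zero-ablation vs. clean) closed by the SAE.

    1.0: splicing in the SAE reconstruction matches the clean model's CE.
    0.0: it is no better than zero-ablating the activation.
    """
    return (ce_zero_ablation - ce_sae) / (ce_zero_ablation - ce_clean)


# Made-up numbers: clean CE 3.0, CE with SAE spliced in 3.5, zero-ablated CE 5.0.
print(ce_loss_recovered(ce_clean=3.0, ce_sae=3.5, ce_zero_ablation=5.0))  # 0.75
```

Note that the score depends only on CE values, not on how well the activations themselves are reconstructed.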

Could you go into more detail on how this would work? For example, Sam Altman wants to raise more money but can't raise as much since Claude-3 is better. So he waits to raise more money until after releasing GPT-5 (so no change in behavior except when to raise money).

If you're arguing that GPT-5 gets released sooner, that time has to come from somewhere. For example, suppose GPT-4 was release-ready by February, but they wanted to wait until Pi Day for fun. Capability researchers keep researching capabilities in the meantime, regardless of whether they were pressured into releasing 1 month earlier instead.

Maybe you're arguing that an earlier release allows more API access, and so more time finagling w/ scaffolding?

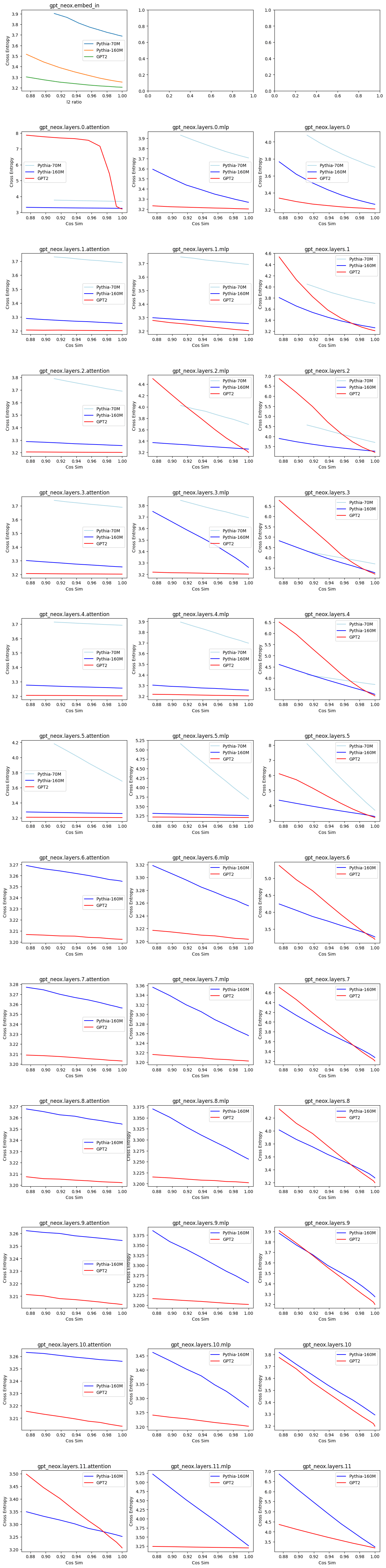

I've only done replications on mlp_out & attn_out for layers 0 & 1 of GPT-2 small & Pythia-70M.

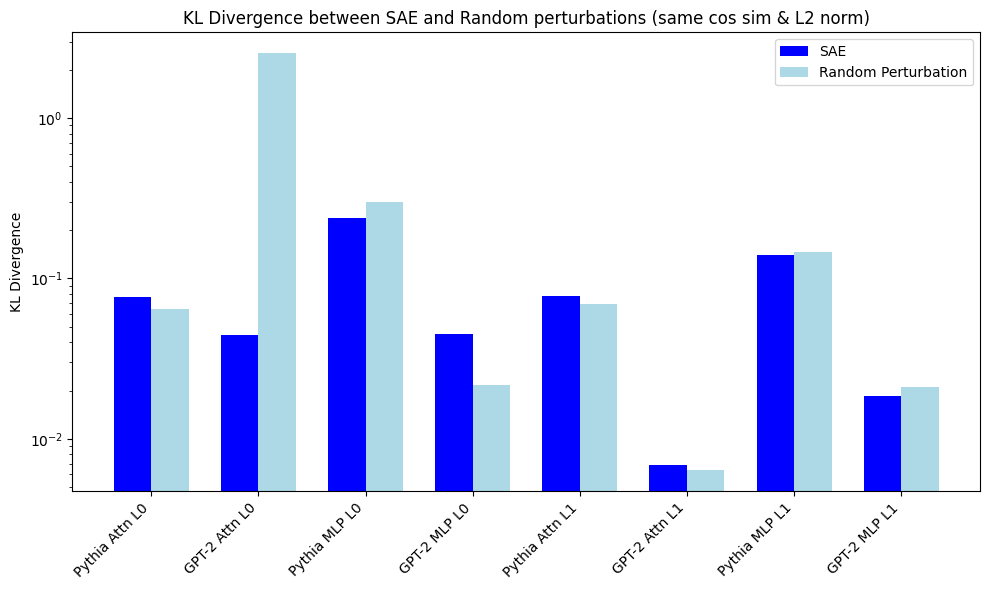

I matched cos-sim instead of using epsilon perturbations. My KL divergence is plotted on a log scale because one KL is ~2.6 for random perturbations.
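A minimal sketch of the KL metric, assuming it is KL(clean || patched) over next-token distributions, averaged across positions (the direction and averaging are my assumptions, not necessarily what either plot used). `mean_kl` is a hypothetical helper working on NumPy logits of shape `(..., vocab)`.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def mean_kl(clean_logits: np.ndarray, patched_logits: np.ndarray) -> float:
    """Mean KL(P_clean || P_patched) over all positions; logits shape (..., vocab)."""
    log_p = log_softmax(clean_logits)
    log_q = log_softmax(patched_logits)
    kl = (np.exp(log_p) * (log_p - log_q)).sum(axis=-1)
    return float(kl.mean())


rng = np.random.default_rng(0)
clean = rng.standard_normal((4, 10))  # 4 positions, vocab of 10
noisy = clean + rng.standard_normal(clean.shape)
print(mean_kl(clean, clean))  # 0.0
print(mean_kl(clean, noisy))  # positive
```

Because KL values can span orders of magnitude across layers, a log axis keeps a ~2.6 outlier and much smaller values readable on the same plot.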

I'm getting different results for GPT-2 attn_out layer 0: my random perturbation has a very large KL. This matches what I found last week when I was checking how robust GPT-2 vs Pythia is to perturbations in the input (picture below). I think both results are actually correct, but my perturbation is at a lower cos-sim, and (as you can see below) KL shoots up for very small cos-sim differences. This is further supported by my SAE's KL divergence for that layer being 0.46, which is larger than for the SAE you show.

Your main results were on the residual stream, so I can try to replicate there next.

For my perturbation graph:

I add noise to change the cos-sim but keep the norm at around 0.9 (similar to my SAEs'). GPT-2 layer 0 attn_out really is an outlier in non-robustness compared to other layers. The results here show that different layers have different levels of robustness to noise, as measured by downstream CE loss. Combined with your results, it would be nice to add points for each SAE's cos-sim/CE.
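One way to build a random perturbation with an exact cos-sim and norm is sketched below. It assumes a single 1-D activation vector and reads "norm at around 0.9" as the ratio of the perturbed norm to the original norm; `perturb_to_cos_sim` is a hypothetical name, not the exact code behind the graph.

```python
from typing import Optional

import numpy as np


def perturb_to_cos_sim(x: np.ndarray, target_cos: float, norm_ratio: float = 1.0,
                       rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Random vector y with cos(x, y) == target_cos and ||y|| == norm_ratio * ||x||.

    x is a single 1-D activation vector.
    """
    rng = np.random.default_rng() if rng is None else rng
    x_hat = x / np.linalg.norm(x)
    # Random Gaussian direction, projected to be orthogonal to x, then unit-normalized.
    noise = rng.standard_normal(x.shape)
    noise -= (noise @ x_hat) * x_hat
    noise /= np.linalg.norm(noise)
    # Mix the two orthonormal directions so the angle to x is exactly arccos(target_cos).
    direction = target_cos * x_hat + np.sqrt(1.0 - target_cos ** 2) * noise
    return norm_ratio * np.linalg.norm(x) * direction


rng = np.random.default_rng(0)
x = rng.standard_normal(768)  # stand-in for one GPT-2 small activation (d_model = 768)
y = perturb_to_cos_sim(x, target_cos=0.95, norm_ratio=0.9, rng=rng)
cos = float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))
ratio = float(np.linalg.norm(y) / np.linalg.norm(x))
print(round(cos, 4), round(ratio, 4))  # 0.95 0.9
```

Projecting the noise onto the subspace orthogonal to `x` is what lets the cos-sim be set exactly, so random-perturbation points can sit at the same cos-sim as each SAE's point.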

An alternative hypothesis to yours is that SAEs outperform random perturbations at lower cos-sim but suck at higher cos-sim (which we care more about).

Throughout this post, I kept thinking about Soul-Making dharma (which I'm familiar with, but not very good at!).

AFAIK, it's about building up the skill of full-body awareness (i.e. instead of taking the breath at the nose as the object, you place attention on the full body plus some extra space, like your "aura"), which gives you much more complete information about the felt sense of different things. For example, when you think of different people, they have different "vibes" that come up as physical sensations in the body, which you can access more fully by maintaining full-body awareness.

The teachers then went on a lot about sacredness & beauty, which seemed most relevant to attunement (although I didn't personally practice those methods due to lack of commitment).

However, having full-body awareness was critical for me to have any success with the soul-making meditation methods, & it's mentioned as a prerequisite for the course. Likewise, attunement may require skill in feeling your body/noticing felt senses.

Agreed. You would need to change the correlation code to hardcode feature correlations; then you can zoom in on those two features when doing the max cosine sim.
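A minimal sketch of toy data with one hardcoded correlation, assuming the usual setup of sparse feature coefficients times random unit-norm ground-truth directions. `sample_toy_data`, `pair`, and `p_cofire` are hypothetical names, not the original correlation code.

```python
from typing import Tuple

import numpy as np


def sample_toy_data(n_samples: int, n_features: int, d_model: int, p_active: float,
                    pair: Tuple[int, int] = (0, 1), p_cofire: float = 0.9, seed: int = 0):
    """Sparse toy data where feature pair[1] is hardcoded to co-fire with pair[0]."""
    rng = np.random.default_rng(seed)
    # Ground-truth feature directions: random unit vectors in d_model dimensions.
    true_dirs = rng.standard_normal((n_features, d_model))
    true_dirs /= np.linalg.norm(true_dirs, axis=1, keepdims=True)
    # Independent sparse activations...
    active = rng.random((n_samples, n_features)) < p_active
    # ...except pair[1], which fires with prob p_cofire whenever pair[0] fires
    # (and at its base rate p_active otherwise).
    i, j = pair
    cofire = rng.random(n_samples) < p_cofire
    active[:, j] = np.where(active[:, i], cofire, active[:, j])
    # Nonzero coefficients drawn uniformly from [0, 1).
    coeffs = active * rng.random((n_samples, n_features))
    return coeffs @ true_dirs, true_dirs, active


data, true_dirs, active = sample_toy_data(10_000, 64, 32, p_active=0.05)
print(data.shape, np.corrcoef(active[:, 0], active[:, 1])[0, 1])
```

After training an SAE on `data`, the max cosine sim for features 0 and 1 specifically shows whether the correlated pair gets merged or split.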

Hey! Thanks for doing this research.

Lee Sharkey et al. did a similar experiment a while back w/ a much larger number of features & dimensions, & there were hyperparameters that perfectly reconstructed the original dataset (as you predicted, this happens as N increases).

Hoagy still hosts a version of our replication here (though I haven't looked at that code in a year!).
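To check whether a dictionary recovered the ground-truth features, one common score is the max cosine sim per ground-truth feature, averaged into a mean max cosine similarity (MMCS). This is a sketch assuming both dictionaries are NumPy arrays of shape `(n, d_model)`; `max_cos_sims` is a hypothetical name, and keeping the per-feature values lets you zoom in on specific features.

```python
import numpy as np


def max_cos_sims(learned: np.ndarray, true: np.ndarray) -> np.ndarray:
    """For each ground-truth feature, its max cosine sim to any learned dictionary element.

    learned: (n_learned, d_model); true: (n_true, d_model). Returns shape (n_true,).
    """
    learned = learned / np.linalg.norm(learned, axis=1, keepdims=True)
    true = true / np.linalg.norm(true, axis=1, keepdims=True)
    sims = true @ learned.T  # (n_true, n_learned)
    return sims.max(axis=1)


true = np.eye(3)
learned = np.array([[2.0, 0.0, 0.0],
                    [0.0, 1.0, 1.0]])
per_feature = max_cos_sims(learned, true)
print(per_feature)         # per-feature scores
print(per_feature.mean())  # MMCS
```

Here the second learned element sits halfway between features 1 and 2, so both get a max cosine sim of about 0.71 even though feature 0 is recovered perfectly.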

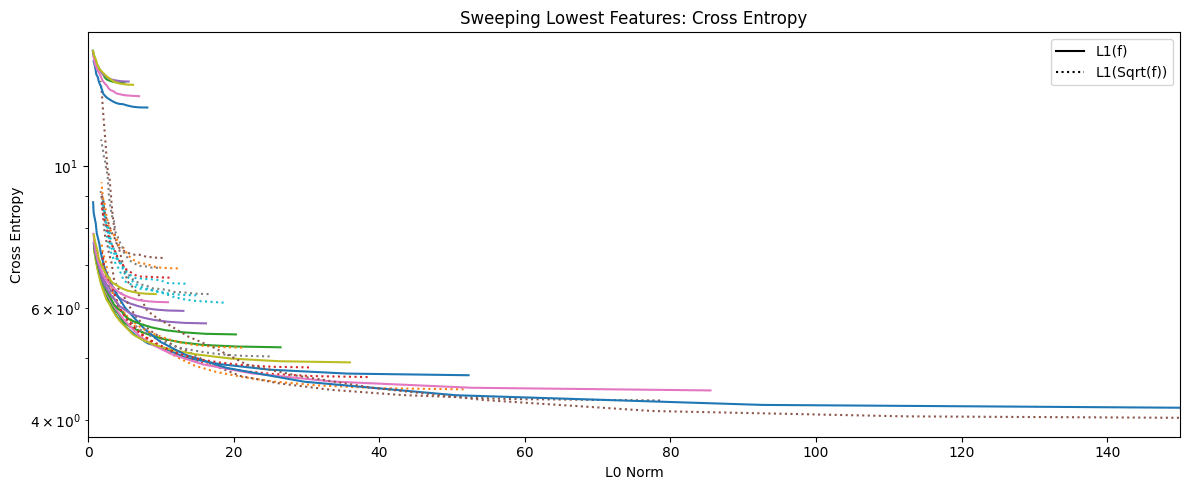

Yep, there are similar results when evaluating on the Pile, with lower CE (except at the low-L0 end).

Thanks for pointing this out! I'll swap the graphs out w/ their Pile-evaluated ones when it runs [Updated: all images are updated except the one comparing the 4 different "lowest features" values]

We could also train SAEs on Pythia-70M (non-deduped), but that would take a couple of days to run & re-evaluate.

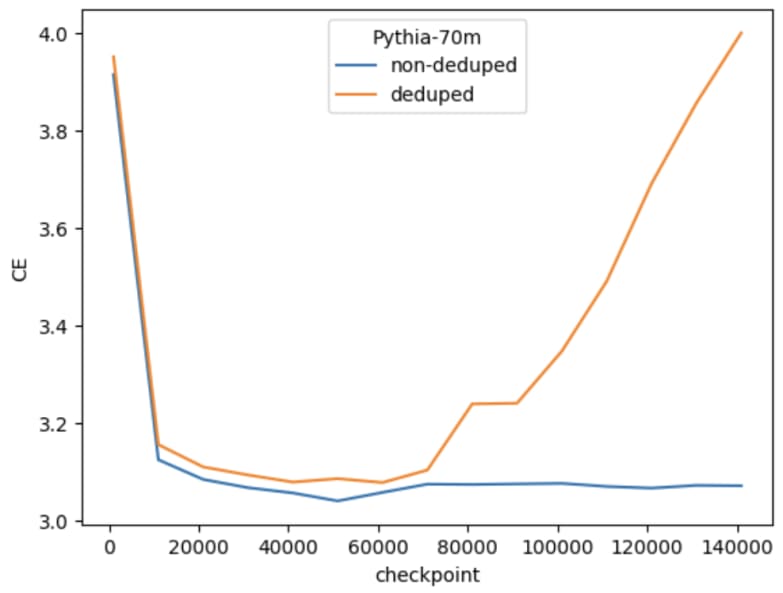

There actually is a problem with Pythia-70M-deduped on data that doesn't start at the initial position. The plot shows non-deduped vs deduped over training (note: they have similar CE if you evaluate on text that starts at the first position of the document).
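To illustrate the distinction, here is a sketch of two ways to cut evaluation sequences from tokenized documents: one window per document starting at its first token, vs. packing all documents into one stream so most windows start mid-document. The helper names and the optional separator token are hypothetical; the default of 256 just mirrors the seq length used in this thread.

```python
from typing import List, Optional


def windows_from_doc_start(docs: List[List[int]], seq_len: int = 256) -> List[List[int]]:
    """One window per document, always starting at the document's first token."""
    return [doc[:seq_len] for doc in docs if len(doc) >= seq_len]


def packed_windows(docs: List[List[int]], seq_len: int = 256,
                   sep_token: Optional[int] = None) -> List[List[int]]:
    """Concatenate all documents into one stream and cut fixed-length chunks.

    Most chunks start mid-document (and may span a document boundary).
    """
    stream: List[int] = []
    for doc in docs:
        stream.extend(doc)
        if sep_token is not None:
            stream.append(sep_token)
    return [stream[i:i + seq_len] for i in range(0, len(stream) - seq_len + 1, seq_len)]


docs = [list(range(300)), list(range(1000, 1300))]  # two fake tokenized documents
print([w[0] for w in windows_from_doc_start(docs)])  # [0, 1000]
print([w[0] for w in packed_windows(docs)])          # [0, 256]
```

The second packed window starts at token 256 of the first document, which is exactly the "doesn't start at the initial position" case.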

We get similarly performing SAEs when training on non-deduped (i.e. the cos-sim & l2-ratio are similar, though of course the CE will differ if the baseline model is different).
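For reference, a minimal sketch of the two reconstruction metrics, assuming cos-sim is computed per token between original and reconstructed activations and l2-ratio is ‖x̂‖/‖x‖ per token, both averaged over tokens (my assumed definitions). `recon_metrics` is a hypothetical name.

```python
import numpy as np


def recon_metrics(x: np.ndarray, x_hat: np.ndarray):
    """Mean per-token cosine similarity and mean l2-ratio ||x_hat|| / ||x||.

    x, x_hat: (n_tokens, d_model) original and SAE-reconstructed activations.
    """
    x_norm = np.linalg.norm(x, axis=1)
    x_hat_norm = np.linalg.norm(x_hat, axis=1)
    cos_sim = (x * x_hat).sum(axis=1) / (x_norm * x_hat_norm)
    l2_ratio = x_hat_norm / x_norm
    return float(cos_sim.mean()), float(l2_ratio.mean())


x = np.array([[3.0, 4.0], [1.0, 0.0]])
x_hat = np.array([[1.5, 2.0], [0.0, 1.0]])
print(recon_metrics(x, x_hat))  # (0.5, 0.75)
```

Both metrics depend only on the activations, which is why they can match across non-deduped and deduped training even when the baseline CE differs.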

However, I do think the SAEs were trained on the Pile & I evaluated on OWT, which would also contribute some CE-difference. Let me check.

Edit: Also the seq length is 256.

Great work!

Did you ever run the L0-approx and the sparsity-frequency penalty separately? It's unclear whether you're getting better results because the L0 function is better or because there are fewer dead features.

Also, a feature frequency of 0.2 is very large! Activating on 1 in 5 tokens is a lot even for a positional feature (since your context length is 128). It'd be bad if the improved results come from polysemanticity sneaking back in through these activations. Sampling datapoints across a range of activations should show where the meaning becomes polysemantic. Is it the bottom 10% of activations? (My preferred cutoff is 10% of the max-activating example's activation.)
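A minimal sketch of both checks, assuming SAE feature activations are a NumPy array of shape `(n_tokens, n_features)` with inactive features at 0. `sample_by_activation_range` bins one feature's activations by fraction of its max, so with 10 bins, bin 0 holds the nonzero activations up to 10% of the max-activating example. Both helper names are hypothetical.

```python
import numpy as np


def feature_frequency(feature_acts: np.ndarray) -> np.ndarray:
    """Fraction of tokens on which each feature is active; (n_tokens, n_features) -> (n_features,)."""
    return (feature_acts > 0).mean(axis=0)


def sample_by_activation_range(acts: np.ndarray, n_bins: int = 10, per_bin: int = 5,
                               seed: int = 0) -> dict:
    """Sample token indices for one feature from bins of activation relative to its max.

    Bin k holds activations in (k/n_bins * max, (k+1)/n_bins * max].
    """
    rng = np.random.default_rng(seed)
    max_act = acts.max()
    samples = {}
    for k in range(n_bins):
        lo, hi = k / n_bins * max_act, (k + 1) / n_bins * max_act
        idx = np.flatnonzero((acts > lo) & (acts <= hi))
        if idx.size:
            samples[k] = rng.choice(idx, size=min(per_bin, idx.size), replace=False)
    return samples


feature_acts = np.array([[0.0, 1.0], [0.0, 0.0], [2.0, 0.0], [0.0, 3.0], [0.0, 0.0]])
print(feature_frequency(feature_acts))  # [0.2 0.4]
acts = np.array([0.0, 0.05, 0.5, 1.0])  # one feature's activations over 4 tokens
samples = sample_by_activation_range(acts)  # bins 0, 4, 9 -> token indices 1, 2, 3
```

Reading the sampled contexts bin by bin, from the top bin down, shows at which activation level the feature stops meaning one thing.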